Difference between revisions of "Strategic Initiatives TSC Dashboard"

Line 55:

***Yellow: 3-4 ballots

***Red: 5+ ballots

+ ***[http://gforge.hl7.org/gf/download/frsrelease/995/10408/Cycles_to_Normative_by_wg_with_ANSIDate.xlsx 2013May Release]

***[http://gforge.hl7.org/gf/download/frsrelease/923/9596/Cycles_to_Normative_by_wg_with_ANSIDate.xlsx 2012Sep Release] includes trend on the average of cycles to reach normative status and ANSI publication. (T1 = Jan-May, T2 = May-Sep, T3 = Sep-Jan)

+ ***2013T1 2013Jan-May average -> 1.7 = Green light

***2012T3 2012Sep-2013Jan average -> #DIV/0! (no ANSI publications this period so far)

***2012T2 May-Sep average -> 1.33 = Green light

Revision as of 13:46, 4 May 2013


HL7 Strategic Initiatives Dashboard Project

Project Scope: The scope of this project is to implement a dashboard showing the TSC's progress on implementing the HL7 Strategic Initiatives for which the TSC is responsible. The HL7 Board of Directors has a process for maintaining the HL7 Strategic Initiatives. The TSC is responsible for implementing a significant number of those initiatives. As part of developing a dashboard, the TSC will need to develop practical criteria for measuring progress in implementing the strategic initiatives. There are two aspects to the project. The first is the process of standing up the dashboard itself. This may end up being a discrete project handed off to Electronic Services and HQ. That dashboard would track progress towards all of HL7's strategic initiatives (not just those the TSC is responsible for managing). The second part of this project will focus on the Strategic Initiatives for which the TSC is responsible.

In discussion at 2011-09-10_TSC_WGM_Agenda in San Diego, a RASCI chart showed which criteria under the Strategic Initiatives the TSC would take responsibility for.

From those, the TSC accepted volunteers to draft metrics with which to measure the TSC criteria.

Industry responsiveness and easier implementation

- Draft Strategic Dashboard criteria metrics recommendations from Mead for: Industry responsiveness and easier implementation

- Transition to Mead

- Staff support: Dave Hamill

Requirements traceability and cross-artifact consistency

- Tracker #2059 Austin will create Strategic Dashboard criteria metrics for: Requirements traceability and cross-artifact consistency

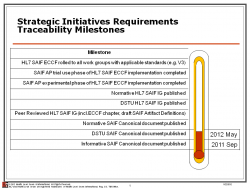

- Requirements traceability - Tied to the HL7 rollout of SAIF ECCF. Measure is the number of milestones completed:

- Informative SAIF Canonical document published

- DSTU SAIF Canonical document published

- Normative SAIF Canonical document published

- Peer Reviewed HL7 SAIF IG including ECCF chapter and draft SAIF Artifact Definitions

- DSTU HL7 SAIF IG published

- Normative HL7 SAIF IG published

- SAIF Architecture Program experimental phase of HL7 SAIF ECCF implementation completed

- SAIF AP trial use phase of HL7 SAIF ECCF implementation completed

- HL7 SAIF ECCF rolled out to all work groups working on applicable standards (primarily V3)

- Cross-artifact consistency

- (per TSC decision 2012-03-26)

- Standards that include V3 RIM-Derived information models.

- Percent of work groups in FTSD, SSDSD and DESD participating in RIM/Vocab/Pattern Harmonization meetings, calculated as a 3-trimester moving average. Green: 75% or better participation; Yellow: 50-74%; Red: 0-49%.

- Other metrics considered

- Survey of newly published standards via the publication request form

- V3 Standards - Measure - Green: All new standards have one or more of the following checked; Yellow: 1-2 standards have none of the following checked; Red: 3 or more standards have none of the following checked

- Standard uses CMETs from HL7-managed CMETs in COCT, POCP (Common Product) and other domains

- Standard uses harmonized design patterns (as defined through RIM Pattern harmonization process)

- Standard is consistent with common Domain Models including Clinical Statement, Common Product Model and "TermInfo"



- Austin notes that these are not necessarily automated metrics for the criteria.


- Staff Support: Lynn Laakso
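The harmonization participation measure above (a 3-trimester moving average mapped to Green/Yellow/Red) can be sketched as follows. This is a minimal illustration, not the TSC's actual calculation: the function names are hypothetical, the sample percentages are made up, and treating values between 74% and 75% as Yellow is an assumption, since the stated bands leave that gap undefined.

```python
from statistics import mean


def participation_percent(participating, total):
    """Percent of work groups that took part in harmonization meetings."""
    if total <= 0:
        raise ValueError("total work groups must be positive")
    return 100.0 * participating / total


def moving_average(values, window=3):
    """Average of the most recent `window` trimester values."""
    recent = list(values)[-window:]
    if not recent:
        raise ValueError("no participation data")
    return mean(recent)


def harmonization_light(percent):
    """Map a participation percentage to the dashboard colour.

    Green: 75% or better; Yellow: 50-74%; Red: 0-49%.
    Assumption: fractional values just below a boundary fall into the lower band.
    """
    if percent >= 75:
        return "Green"
    if percent >= 50:
        return "Yellow"
    return "Red"


# Illustrative values only, one percentage per trimester (oldest first).
trimesters = [10.0, 40.0, 50.0, 45.0]
average = moving_average(trimesters)  # uses the last three trimesters
print(average, harmonization_light(average))
```

With these bands, the 44.12% rolling average reported on the dashboard for 2012 maps to Red.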

Product Quality

- Tracker #2058 Woody will create Strategic Dashboard criteria metrics for: Product Development Effectiveness

- Measure is the average number of ballot cycles necessary to pass a normative standard. Calculated as a cycle average based on publication requests. Includes:

- (Note: This metric uses "ballots" rather than ballot cycles because more complex ballots frequently require two cycles to complete reconciliation and prepare for a subsequent ballot.)

- Projects at risk of becoming stale 2 cycles after last ballot

- Two cycles since last ballot but not having posted a reconciliation or request for publication for normative edition

- Items going into a third or higher round of balloting for a normative specification

- (Note: This flags concern while the process is ongoing; it is not necessarily a measure of quality once the document has finished balloting.)

- Green: 1-2 ballots

- Yellow: 3-4 ballots

- Red: 5+ ballots

- 2013May Release

- 2012Sep Release includes trend on the average of cycles to reach normative status and ANSI publication. (T1 = Jan-May, T2 = May-Sep, T3 = Sep-Jan)

- 2013T1 2013Jan-May average -> 1.7 = Green light

- 2012T3 2012Sep-2013Jan average -> #DIV/0! (no ANSI publications this period so far)

- 2012T2 May-Sep average -> 1.33 = Green light

- 2012T1 Jan-May average -> 2.71 = Yellow light?

- Past 1 year average -> 1.35 = Green light


- Publishing will also create a "Product Ballot Quality" metric (at the level of the product, but generated for each Ballot) that creates a green/yellow/red result by amalgamating specific elements such as:

- Content quality, including:

- ability to create schemas that validate (metric of model correctness) [where failure could have been prevented by the product developer],

- the presence of class- and attribute-level descriptions in a static model (metric of robust documentation)


- Staff Support: Lynn Laakso
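The Product Development Effectiveness measure above (average ballots per normative publication, mapped to a light) might be computed along these lines. This is a sketch under stated assumptions: the function names are hypothetical, and because the page defines the bands for whole ballot counts (1-2, 3-4, 5+) without saying how a fractional average is classified, the cut-offs below (Green up to 2, Yellow below 5) are an assumption chosen to match the readings listed above, including the tentative "Yellow light?" for 2.71. A period with no publications returns no value instead of the spreadsheet's #DIV/0! error.

```python
def average_ballots(ballot_counts):
    """Mean number of ballots per published normative standard.

    Returns None when the period has no publications, which avoids
    the division by zero that shows up as #DIV/0! in a spreadsheet.
    """
    counts = list(ballot_counts)
    if not counts:
        return None
    return sum(counts) / len(counts)


def ballot_light(average):
    """Map an average ballot count to a dashboard colour.

    Assumed cut-offs for fractional averages:
    Green <= 2, Yellow < 5, Red otherwise.
    """
    if average is None:
        return "No data"
    if average <= 2:
        return "Green"
    if average < 5:
        return "Yellow"
    return "Red"


# Averages reported on this page, checked against the assumed cut-offs.
for label, value in [("2013T1", 1.7), ("2012T3", None), ("2012T2", 1.33)]:
    print(label, ballot_light(value))
```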

WGM Effectiveness

- Tracker #2057 was created to document the Strategic Dashboard criteria metrics for: WGM Effectiveness

- Draft Criteria

- % of WGs attending

- % of WGs that post their agenda within the appropriate timeframe

- % of WGs that achieve quorum at the WGM

- Number of US-based attendees

- Number of International attendees

- Number of non-member attendees

- Profit or Loss

- Number of FTAs

- Number of tutorials offered

- Number of students attending tutorials

- Number of quarters for which WGs had an agenda but did not make quorum

- For US-based locations: one set of percentages for success

- For international locations: a different set of percentages for success

- Metrics may also be based on each WG's prior numbers


- Staff Support: Dave Hamill

- TSC Liaison: Jean Duteau
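The three percentage-based draft criteria above (WGs attending, posting an agenda on time, achieving quorum) could be tallied from per-work-group records as sketched below. The record type, its field names and the sample work groups are hypothetical. No success thresholds are applied, because the page does not define them.

```python
from dataclasses import dataclass


@dataclass
class WorkGroupRecord:
    """Hypothetical per-WG record for a single working group meeting."""
    name: str
    attended: bool
    agenda_posted_on_time: bool
    achieved_quorum: bool


def percent(flags):
    """Percentage of True values, or None for an empty input."""
    flags = list(flags)
    if not flags:
        return None
    return 100.0 * sum(flags) / len(flags)


def wgm_summary(records):
    """Compute the three percentage criteria across all work groups."""
    records = list(records)
    return {
        "attending": percent(r.attended for r in records),
        "agenda_on_time": percent(r.agenda_posted_on_time for r in records),
        "quorum": percent(r.achieved_quorum for r in records),
    }


# Made-up sample data for illustration only.
sample = [
    WorkGroupRecord("WG-A", True, True, True),
    WorkGroupRecord("WG-B", True, True, False),
    WorkGroupRecord("WG-C", True, False, False),
    WorkGroupRecord("WG-D", False, False, False),
]
print(wgm_summary(sample))
```

Keeping the tally separate from any threshold check would let US-based and international meetings apply different success percentages to the same summary.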

Dashboard

| Industry responsiveness and easier implementation | Requirements Traceability and cross-artifact consistency | Product Quality | WGM Effectiveness |
| --- | --- | --- | --- |
| SI Project assessment | Requirements traceability | Product Development Effectiveness. Key: | WGM Effectiveness - U.S. meetings. If you have trouble with the above file (a .DOC file opens instead of a .XLS file), the same information can be found via Jan 2013 Draft Metrics. The main updates from January 2013 are the next steps for Jean Duteau and Dave Hamill: |
| TBD | Cross-Artifact Consistency. Harmonization participation: 44.12% rolling average 2012 = RED | Product Ballot Quality | WGM Effectiveness - International meetings |